All I can really say about the appointment at my kid’s allergist is that it occurred. We waited weeks to get in, got some tests, received a diagnosis and a treatment plan, had a weird insurance thing that wasted our time. American healthcare took place.

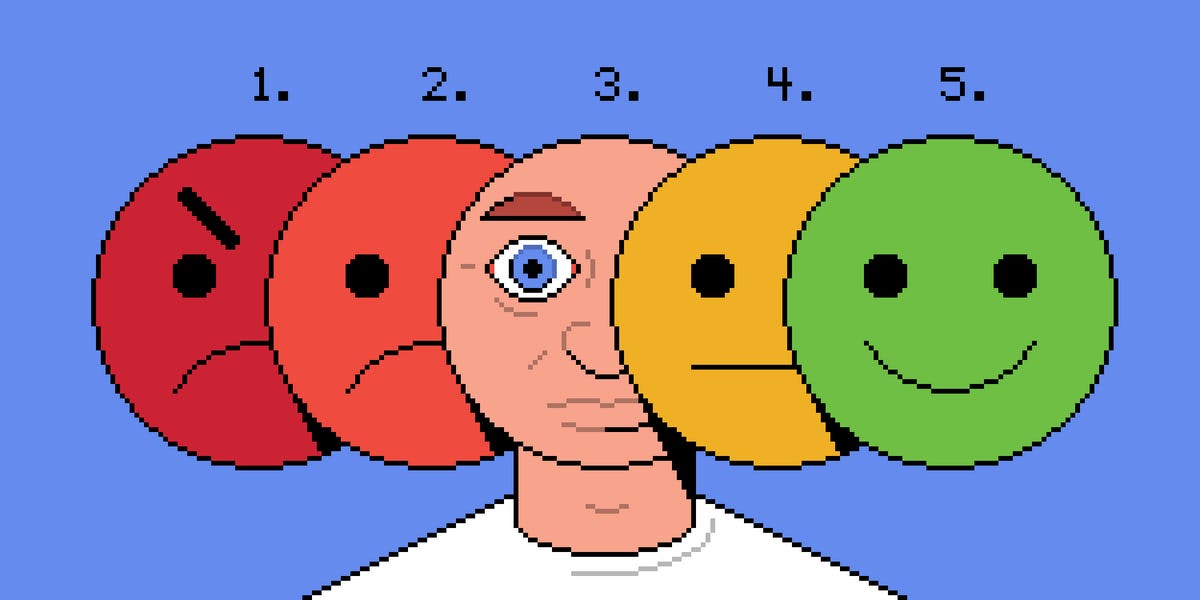

Then the allergist’s office emailed me a survey with the usual set of questions. How would I rate the service I received? How likely was I to recommend them to a friend? But I’ve gotta say, getting asked how satisfied I was with the care provided by a pediatric allergist was baffling to me. My child received necessary medical treatment at a speed commensurate with its urgency. It was fine. What aspect of it could I possibly evaluate? I don’t need to express an opinion about the chairs in the waiting room.

The whole thing vexed me enough that I started to really notice customer satisfaction surveys — and, as I’m sure you’ve seen, they are everywhere. It seems like every interaction I have with a money-involving organization also comes with a polite request for my feedback. A restaurant. A hotel. A shop. The insurance company that wasted my time. Every time I buy something or interact with someone: another survey. While I was pitching this story to my editor, his email dinged. A survey! How’d we do? How long was your wait time? How satisfied were you with the knowledge and professionalism of the salesperson who served you?

Most of the time I’m not asked to evaluate the quality of a product or service. I’m asked to evaluate the experience, the meta-consumption that drives our hyperactive service economy. A tsunami of surveys has turned us all into optimization analysts for multibillion-dollar companies. Bad enough I’m providing free labor to help a transnational corporation improve its share price or “evaluate” a low-paid, overworked, nonunion employee. It’s more than annoying. I’m starting to suspect it’s unethical.

This isn’t just my imagination. We’re all getting more requests for feedback. Global spending on market research has doubled since 2016, to more than $80 billion a year. More than half of that money is doled out in the United States, and a fifth of it — $16 billion! — is devoted to customer surveys.

Consider the experience of Qualtrics, one of the largest survey-data companies. In the past year, the firm has analyzed 1.6 billion survey responses. That’s a 4% increase over the prior year — and responses for the first quarter of 2024 were 10% above what Qualtrics projected. Its analysis of “non-structured data,” which is to say customer-service phone calls and online chatter, hit 2 billion conversations last year. This year the company projects an increase of 62%.

Why are there suddenly so many surveys? Because people have so many options today that they’re not bothering to complain when something sucks. They just move on to a different, equally accessible website. A company pisses them off or disappoints them, and poof! They’re gone.

“When a customer has a poor experience, 10% fewer of them are telling the company about it than they did in 2021,” says Brad Anderson, the president of product and engineering at Qualtrics. “What’s happening is they’re just switching.” So companies are using surveys in a bid to hang on to those disloyal customers. After all, it’s way more expensive to acquire a new customer than to keep an old one.

The tricky part is that marketing research has shown that the objective quality of a product, its nominal goodness, matters less than whether it meets customer expectations. “Quality,” as one research paper put it, “is what the customer says it is.” Customer satisfaction correlates with profitability, with share price, with success.

Now, to get all philosophical for a moment, what even is satisfaction, anyway? People tried for decades to figure that out. Then, in 2003, a Bain consultant named Fred Reichheld came up with an answer. He called it the Net Promoter Score.

Before I tell you what that is, let me ask you a question: On a scale of 0 to 10, how likely would you be to recommend this article to someone else?

That’s it. That’s what the Net Promoter Score does. If you’d recommend something to someone else, it has by definition satisfied you. Mystery solved.

The NPS came along at the same time as the widening use of the internet and social media, which made it very easy to ask about. Phone calls, snail mail — that stuff is time-consuming and expensive. But surveys sent via email and text are fast and cheap.

In American marketing, NPS became an unstoppable craze. Other metrics followed: the Customer Satisfaction Score, the Customer Effort Score, measurements of the entire Customer Experience. A survey, or monitoring calls to customer service, could reveal loyalty, intent to buy again, the specific parts of the “customer journey” that were most pleasant. “People don’t choose based on objective quality anymore,” says Nick Lee, a marketing professor at the Warwick Business School. “Value is added by way more than what we would call objective product features.”

At the peak of the so-called sharing economy, customer surveys were all-powerful. They went both ways: Suddenly, Uber drivers and Uber riders both had star ratings to care about. Customer surveys were going to fix asymmetrical marketplace information. But of course, the whole thing was frothier than a five-star milkshake. By the late 2010s it was becoming clear that all those reviews and ratings were getting less useful over time. They were subject, it turned out, to “reputation inflation.” Eventually everything gets four stars out of five.

The glut of customer surveys has created an additional problem for marketers. Email surveys are like the robocalls of old: You hit delete without even looking at them. “People receive so many survey requests that they’re more likely to refuse to participate in any survey,” says James Wagner, a researcher at the University of Michigan’s Institute for Social Research. It’s called oversurveying, and it makes people less likely to respond. Which means that, for statistical validity, companies have to send out more surveys. Which lowers the response rate even further, which means that companies have to send out yet more surveys, in a never-ending doom loop. On a scale of 1 to 5, customer satisfaction with customer-satisfaction surveys is headed to zero.

In reality, nobody’s even sure these surveys are measuring the right thing. “Companies regularly collect customer-satisfaction measures, Net Promoter Scores, things like that,” says Christine Moorman, a business-administration professor at Duke University who heads up a semiannual survey of hundreds of chief marketing officers. “But then the question is what do they do with it, and to what strategic ends? Most of them are doing it out of habit, not because they’re thinking about the larger strategic questions they have.”

Big survey companies don’t just dump a giant Excel spreadsheet on their clients and send them an invoice. They offer sophisticated analyses of the data they collect. But unless those numbers are tied to possible changes the client might make, what’s the point? “It’s a huge arms race,” says Lee, the Warwick marketing prof. “If you can give me more data rather than less data, I want more data. But the business model as to whether that data is valuable, it’s sometimes questionable. Because people don’t know what to do with the data, and they let the agency tell them what it says.” Just because a company gets a bunch of survey results doesn’t mean it knows what to do with them.

Customer surveys aren’t just bad for companies. After reading the copious research on how surveys are actually used, I’ve come to the conclusion that they’re even worse for us, the oversurveyed customers.

Any time a scientist wants to do research involving humans, it’s a whole thing. Such research always comes with risks, from exposing people to an untested drug to simply wasting their time. For a study to be approved by an Institutional Review Board, its potential results have to be worth the risks and provide some benefit to humanity. That’s called “equipoise.” And if a proposed experiment on living things doesn’t have it, you ain’t supposed to do the experiment.

Perhaps customer surveys should be evaluated for equipoise. If the companies actually use the data to improve a product or experience, that’s good for us subjects. But what if it’s used only to improve the company’s share value or profitability? Or to discipline or fire employees? That only helps the company. And that doesn’t even take into account whether I, the surveyed one, gave my consent for data I provided to be used in that way — a key to ethical research.

“Maybe we should have to pre-tell people what we’re going to do with the data before we get it,” Lee says. “That would be a way to stop companies from doing it indiscriminately.” But he knows that’s a nonstarter. “We’d be adding bureaucracy into the system. Never a popular thing to do with companies.”

Worse, for vast swaths of services, you and I are the last people anyone should be soliciting opinions from. Things like doctor visits, legal services, or school classes are “pretty hard for the user to evaluate,” Lee says. “We ask for customer feedback on these things all the time, but it’s hard for a customer to give you immediate feedback, because a customer doesn’t know what quality is yet.” The college class you hated because it was hard, and at 8 a.m., might turn out to be your favorite academic memory and the foundation for your professional skill set 15 years later. Whether a visit to the mechanic was pleasant doesn’t tell you how well they fixed your car. You have to drive around with your new drive shaft awhile to know whether you got shafted.

Lee has unpublished data, which hasn’t been peer-reviewed, comparing hospital performance in Britain’s National Health Service with surveys of both patients and employees. “It’s not surprising that the best hospitals have the best patient feedback and best worker feedback,” he says. But what is surprising is that worker feedback, not customer responses, correlates most closely with quality. Users, it turns out, aren’t very good at telling what’s what.

You know what is good at sorting through tons of data? Artificial intelligence. As email surveys get lower and lower response rates, consumer marketing companies have begun to tout their acumen at applying AI to the unstructured verbiage of online reviews, social-media posts, and call-center transcripts. Maybe these new tools, based on large language models, will be able to coax better responses from oversurveyed consumers. “It’s the ability to be able to detect when there’s a low-quality answer and come back and ask the customer for more data,” Anderson says. “When we ask the second question, 40% of the time the customer engages and provides more data. The number of syllables in the second response increases by 9x.”

Now, if I get a callback from a customer-survey robot, there’s a good chance most of those additional syllables will be profane. How will I rate my experience getting interviewed by an AI? It might get more actionable data out of me than that email from my kid’s allergist did. But I’m pretty sure I won’t recommend it to a friend.

Adam Rogers is a senior correspondent at Business Insider.